About the Hackathon

# Overview

Pride yourself on your machine learning capabilities and evaluation chops?

Here’s your chance to flex those muscles, anon.

Create a machine learning or metrics-based model that produces 15,000 weights indicating the contribution of open-source repositories to the Ethereum universe.

The models that best align with juror rankings for a subset of these dependencies will get to allocate $170,000 to approximately 5,000 open-source repositories—and win prizes worth $50,000 from Vitalik Buterin!

For more details or if you have any questions, see the resources below:

- For competition-related questions, join the [Pond Developer Group Chat](https://t.me/+-_mVdbexcIFlMDI1).

- To contact the initiator of the competition, join the [DeepFunding Telegram](https://t.me/AgentAllocators/1) group.

- Explore the [DeepFunding](https://www.deepfunding.org/) website, and visit the forum discussion page where you can share your models.

Check out the video below for a quick introduction.

# Timeline

### **This contest is open for three months, from February 10th to May 10th.**

# Objective

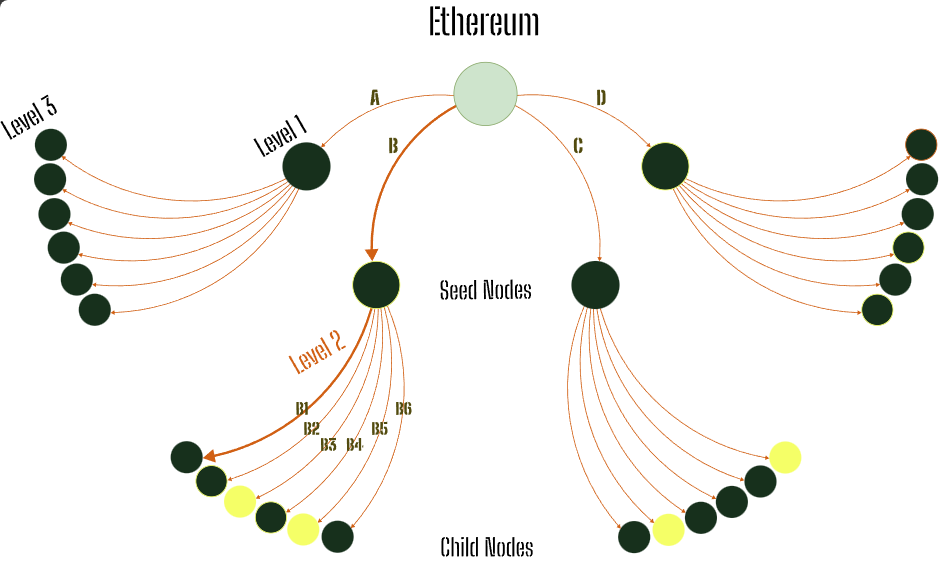

Given approximately 5,000 projects (represented by their GitHub repositories) and the dependencies among them, your task is to **measure the relative contribution of each dependency (source node) to its dependent (target node).** You’re free to approach this challenge however you see fit—from feature engineering to model selection and optimization—and you are encouraged to incorporate external datasets as well.
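One way to picture the output is as a weight attached to every (target, source) edge in the dependency graph. The sketch below is illustrative only: it assumes that the weights of a target's dependencies should sum to 1, which this page does not state, and the repository names and raw scores are hypothetical placeholders for whatever your model produces.

```python
from collections import defaultdict


def normalize_weights(raw_scores):
    """Convert raw (target, source) scores into per-target relative weights.

    Assumption: weights for each target's dependencies sum to 1.
    Check the official submission format before relying on this.
    """
    by_target = defaultdict(dict)
    for (target, source), score in raw_scores.items():
        # Clamp negative scores to zero so weights stay non-negative.
        by_target[target][source] = max(score, 0.0)

    weights = {}
    for target, deps in by_target.items():
        total = sum(deps.values())
        for source, score in deps.items():
            # Fall back to a uniform split when every raw score is zero.
            weights[(target, source)] = score / total if total > 0 else 1.0 / len(deps)
    return weights


# Hypothetical raw scores, e.g. from a feature-based model.
raw = {
    ("repo_b", "dep_b1"): 30.0,
    ("repo_b", "dep_b2"): 10.0,
    ("repo_c", "dep_c1"): 0.0,
    ("repo_c", "dep_c2"): 0.0,
}
weights = normalize_weights(raw)
for edge, weight in sorted(weights.items()):
    print(edge, round(weight, 3))
```

Normalizing per target keeps the weights comparable across projects with very different numbers of dependencies, which matters when jurors compare two dependencies of the same parent.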

Jurors will conduct random spot checks to answer questions such as:

- “Which repo, A or B, has been more valuable to Ethereum’s success?”

- “Between B1 and B2, which has contributed more to B?”

- “How much of B’s value comes from B itself vs. from its dependencies?”

Submissions receive higher scores if they align more closely with these spot checks. The detailed evaluation method is described at [Cryptopond - Overview](https://cryptopond.xyz/modelfactory/detail/2564617).
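For a rough local sanity check, you could measure how often your weights agree with pairwise juror choices. This is not the official metric (that is defined on the Cryptopond page); it is a simple agreement rate, and the `(a, b, winner)` comparison format and repository names are assumptions made for illustration.

```python
def pairwise_agreement(weights, comparisons):
    """Fraction of juror comparisons where the higher-weighted repo matches the juror's pick.

    weights: dict mapping repo name -> predicted weight.
    comparisons: list of (repo_a, repo_b, winner) tuples (hypothetical format).
    """
    if not comparisons:
        return 0.0
    agree = 0
    for repo_a, repo_b, winner in comparisons:
        # Repos missing from the predictions are treated as weight 0.
        predicted = repo_a if weights.get(repo_a, 0.0) >= weights.get(repo_b, 0.0) else repo_b
        agree += predicted == winner
    return agree / len(comparisons)


# Hypothetical predictions and juror votes.
predicted_weights = {"repo_a": 0.5, "repo_b": 0.3, "repo_c": 0.2}
juror_votes = [
    ("repo_a", "repo_b", "repo_a"),
    ("repo_b", "repo_c", "repo_c"),
    ("repo_a", "repo_c", "repo_a"),
]
rate = pairwise_agreement(predicted_weights, juror_votes)
print(f"agreement rate: {rate:.2f}")
```

An agreement rate ignores how strongly a juror preferred one repository over the other, so treat it as a coarse signal alongside the leaderboard score.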

# Datasets

The dataset can be downloaded from [Cryptopond - Dataset](https://cryptopond.xyz/modelfactory/detail/2564617?tab=1).

You may use any external datasets you find helpful for measuring contributions. Below are the datasets and evaluation procedures provided in this contest:

- **Human Jury Votes:** Model submissions will be tested against a human jury’s votes on which repository contributes more value to the ecosystem.

- **Ongoing Data Collection:** Jury data will be collected on a rolling basis throughout the competition. Some jurors are chosen via a nomination tree, where each juror makes 30 comparisons and nominates two additional jurors. Other jurors are invited based on their expertise with specific seed nodes and dependencies.

- **Data Splits:** To prevent "mutual information" from limiting generalizability, jury data is divided into a Training set (publicly available for model training) and a Test set (kept private for evaluation).

- **Leaderboard Updates:** As new jury data becomes available, the leaderboards will be updated periodically to reflect each model's performance against the latest data.
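Because the Test set stays private, it can help to hold out part of the public Training set for your own validation. The sketch below shows one way to do that, assuming the training jury data can be loaded as a list of comparison records; the record format, the 20% hold-out fraction, and the function name are all illustrative choices, not part of the contest rules.

```python
import random


def split_comparisons(comparisons, holdout_fraction=0.2, seed=0):
    """Shuffle juror comparisons and split them into fit and hold-out lists."""
    rng = random.Random(seed)  # Fixed seed keeps the split reproducible.
    shuffled = list(comparisons)
    rng.shuffle(shuffled)
    n_holdout = int(len(shuffled) * holdout_fraction)
    return shuffled[n_holdout:], shuffled[:n_holdout]


# Hypothetical comparison records: (repo_a, repo_b, winner).
training_votes = [(f"repo_{i}", f"repo_{i + 1}", f"repo_{i}") for i in range(10)]
fit_set, holdout_set = split_comparisons(training_votes)
print(len(fit_set), len(holdout_set))
```

Since new jury data arrives on a rolling basis, rerunning the split whenever the Training set is refreshed keeps the local estimate in step with the leaderboard.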

# Evaluation and Submission

**All submissions and evaluation take place on Cryptopond's competition page:**

[Quantifying Contributions of Open Source Projects to the Ethereum Universe](https://cryptopond.xyz/modelfactory/detail/2564617?tab=0)

After successfully submitting your model results, you can see your score and your position on the [leaderboard](https://cryptopond.xyz/modelfactory/detail/2564617?tab=2).